Unaligned Video-Text Pre-training using Iterative Alignment

Abstract

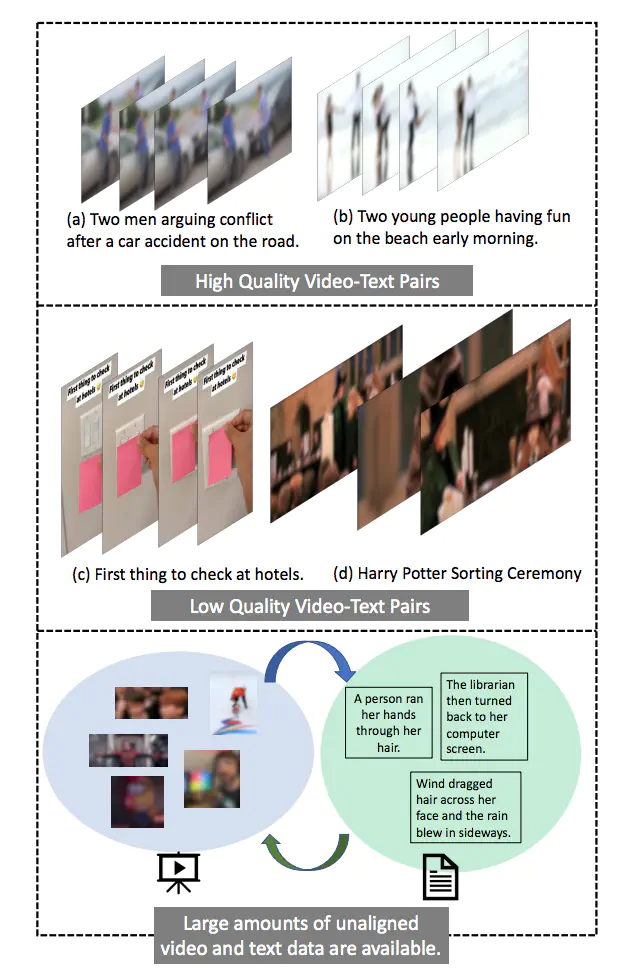

Existing state-of-the-art vision-language models follow the widely used recipe of pre-training on a large corpus of image-text pairs, followed by fine-tuning on one or more downstream tasks. Similar methods have also been shown to be successful in video-language tasks. However, such pre-training schemes are inherently restricted by the availability of large volumes of high-quality paired video-caption data, which is often found only in particular video domains such as stock footage or instructional videos. To address this limitation, we explore utilizing unaligned vision and text corpora, which offers two distinct advantages: (i) access to orders of magnitude more data, and (ii) the ability to obtain such data for diverse domains. We show that our proposed iterative alignment method, which aligns the vision and language modalities during the pre-training step, can significantly improve downstream task performance compared to a setup with no pre-training. Experiments on multiple diverse video-language benchmarks validate the effectiveness of our approach.